At Boston Limited, our dedicated Boston Labs serves as the hub for product testing and development, giving us the ability to trial the latest hardware from across our portfolio of vendors. We don’t just benchmark hardware to verify vendor claims – we also integrate the hypervisor or operating system, software and orchestration layers to replicate real-world configurations in the way our customers would use them.

Proxmox VE is a KVM-based hypervisor that enables the deployment and management of multiple virtual machines through an intuitive web interface. Having been on the market for over a decade, Proxmox has gained significant momentum in recent years, driven by changes in the virtualisation industry and rising costs from other providers. Whether it’s escalating licence fees for existing solutions or bringing workloads back from the cloud, Proxmox VE stands out as a cost-effective, feature-rich and hardware-agnostic platform.

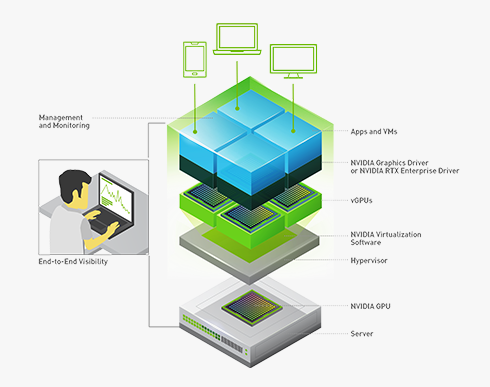

Virtual GPUs (vGPUs) let multiple virtual machines share a single physical GPU, transforming how you deploy graphics- and compute-intensive workloads. Proxmox VE’s integration of QEMU/KVM with VFIO and NVIDIA’s vGPU drivers makes it straightforward to carve a GPU into slices that each VM can use as if it owned the hardware.

Benefits of vGPU on Proxmox

- Resource Efficiency: By time-slicing or partitioning one GPU across several VMs, you can achieve far higher utilisation compared to dedicating one GPU per VM.

- Cost Savings: Consolidating GPU hardware reduces capital expenditure and power/space overhead in your data centre. Proxmox VE itself carries no hypervisor licensing costs; paid support subscriptions, which also grant access to the enterprise repositories, are optional. Together these can lower overall TCO.

- Multi-Tenant Isolation: Each VM gets its own vGPU profile, ensuring predictable performance boundaries without fully exposing the physical device. Different vGPU schedulers can prioritise either quality of service (avoiding noisy-neighbour effects) or maximum utilisation.

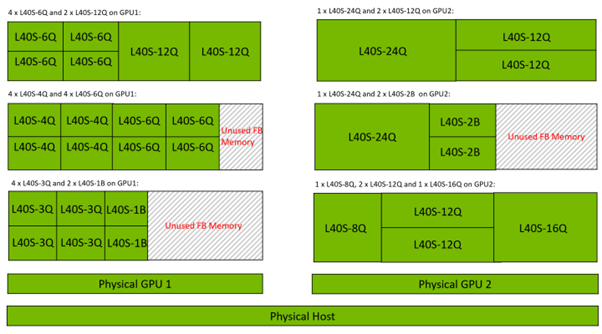

- Dynamic Scalability: You can adjust a VM’s vGPU profile (for example, its framebuffer size) to match evolving workload demands. As of version 17 of NVIDIA’s vGPU software, you can also mix vGPU profiles of different sizes on a single card.

- Simplified Management: Centralised Proxmox tools let you assign, monitor and reconfigure vGPUs from a single-pane-of-glass interface.

Imagine a digital media studio wanting to spin up multiple render nodes. On bare metal, you may have to dedicate an entire GPU to each render node, which can leave it underutilised, especially between workloads. The same applies to PCIe pass-through, which requires a separate physical GPU in the system for every VM.

NVIDIA’s vGPU technology lets you split a GPU’s VRAM (framebuffer) into pieces and allocate each piece to an individual VM. For example, you could take an NVIDIA RTX PRO 6000 series GPU and slice it into 12, giving each VM 8GB of framebuffer whilst all guest VMs share the card’s full compute power. As mentioned, recent versions no longer require all profiles to be the same size, so you can co-locate low-demand 2GB or 4GB profiles alongside a more demanding 16GB profile. This can improve the utilisation of each card’s VRAM and compute capacity.
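
The framebuffer arithmetic above can be sanity-checked with a small Python sketch. It assumes a 96GB card (the 12 × 8GB example) and treats the card as a simple pool of framebuffer. NVIDIA’s actual mixed-size mode applies its own placement rules and per-profile instance limits, which this sketch does not model, so a layout that passes here could still be rejected. The function names are illustrative and are not part of any NVIDIA or Proxmox tool.

```python
"""Rough framebuffer budgeting for vGPU profiles on one physical GPU.

Assumption (illustrative only): a 96 GB card, matching the 12 x 8 GB example.
Only the total framebuffer is checked; NVIDIA's real placement rules for
mixed-size vGPUs are NOT modelled here.
"""

TOTAL_FRAMEBUFFER_GB = 96  # assumed capacity of the card


def remaining_gb(profiles_gb: list[int], total_gb: int = TOTAL_FRAMEBUFFER_GB) -> int:
    """Framebuffer (GB) left after placing the requested profiles (negative = oversubscribed)."""
    return total_gb - sum(profiles_gb)


def fits(profiles_gb: list[int], total_gb: int = TOTAL_FRAMEBUFFER_GB) -> bool:
    """True if the requested profile sizes fit within the card's framebuffer."""
    return remaining_gb(profiles_gb, total_gb) >= 0


if __name__ == "__main__":
    uniform = [8] * 12                 # twelve identical 8 GB slices
    mixed = [16] + [4] * 8 + [2] * 12  # one demanding VM plus lighter desktops
    too_many = [16] * 7                # 112 GB requested
    for name, layout in [("uniform", uniform), ("mixed", mixed), ("too_many", too_many)]:
        print(name, fits(layout), remaining_gb(layout))
```

Running it prints `uniform True 0`, `mixed True 24` and `too_many False -16`: the mixed layout still leaves 24GB free for further VMs, while seven 16GB profiles oversubscribe the card.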

This can be particularly advantageous for virtual workstations. Standard virtual machines have very limited graphics capability: they can feel sluggish to use and struggle to play video. Adding a vGPU profile to a VM gives it graphical acceleration. For knowledge workers, 1 or 2GB per profile may be enough, allowing dozens of people to share a single GPU and the rest of the server’s resources.

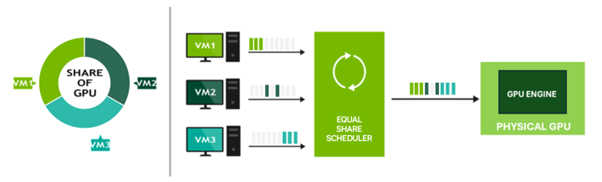

You may need to guarantee the performance of your VMs. The default scheduler is best effort (round-robin), giving each vGPU access to as much compute as is available. While this can provide the greatest GPU utilisation, it can suffer from “noisy neighbour syndrome”, where one especially demanding VM impacts the performance of the other VMs sharing that hardware.

Changing the scheduler to equal share can ensure quality of service for each VM. However, this comes at the expense of GPU utilisation, as it caps the compute available to any single vGPU at an equal share of the GPU: 1/N for N running vGPUs (in the example below, with three vGPUs, that would be 33%).
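
The trade-off between the two schedulers can be expressed as a simplified Python model: under best effort, one busy vGPU can in principle approach the whole GPU when its neighbours are idle, while equal share caps each running vGPU at 1/N. The labels `"best_effort"` and `"equal_share"` are hypothetical names for this sketch, not NVIDIA configuration values, and real throughput also depends on time-slice length and workload behaviour.

```python
from fractions import Fraction


def max_compute_share(running_vgpus: int, scheduler: str) -> Fraction:
    """Upper bound on the fraction of GPU compute one vGPU can use (simplified model).

    `scheduler` takes hypothetical labels for this sketch: "best_effort" or "equal_share".
    """
    if running_vgpus < 1:
        raise ValueError("at least one vGPU must be running")
    if scheduler == "best_effort":
        # Unused time slices go to whichever vGPU has work, so one busy VM
        # can approach the full GPU when its neighbours are idle.
        return Fraction(1)
    if scheduler == "equal_share":
        # Each running vGPU is capped at an equal slice of the GPU.
        return Fraction(1, running_vgpus)
    raise ValueError(f"unknown scheduler label: {scheduler!r}")


if __name__ == "__main__":
    for sched in ("best_effort", "equal_share"):
        share = max_compute_share(3, sched)
        print(f"{sched}: {float(share):.0%}")
```

With three running vGPUs this prints `best_effort: 100%` and `equal_share: 33%`, matching the 33% cap described above.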

High-Level Implementation Example

Here’s a streamlined view:

- Prepare Host: The Proxmox team has made this even easier: it just requires you to run the pve-nvidia-vgpu-helper tool, which automatically enables settings such as IOMMU and blacklists conflicting drivers.

- Head over to NVIDIA’s enterprise portal and download the vGPU driver package (the latest at the time of writing is v18.4), as well as the NVIDIA licence server.

- Set up a licence server (this can run on a local VM) and allocate your licences to it.

- Install the vGPU host driver on the Proxmox host.

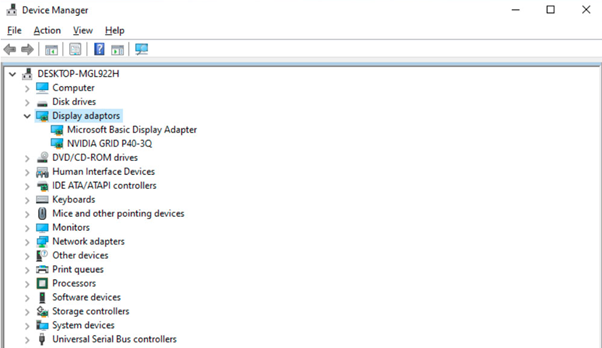

- Define vGPU Profiles: For each VM, select a profile (e.g. GRID 3Q for a 3GB framebuffer). In Proxmox, go to the VM’s Hardware → Add → PCI Device, find the GPU and add the chosen mdev profile.

- Launch & Validate: Start your VMs, each with its assigned vGPU profile.

- Set up remote desktop access (RDP, or something like Parsec). You will lose access via the console once the vGPU is active and providing graphical output.

- Inside each VM, install the NVIDIA driver and run nvidia-smi to confirm the vGPU allocation.

- Testing: Assign Blender or other GPU-accelerated render queues to the VMs.

- Monitor GPU usage centrally on the Proxmox host with nvidia-smi across all vGPU instances.
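
The Define vGPU Profiles step can also be scripted from the Proxmox host shell instead of clicking through the GUI. This Python sketch only builds the `qm set` command that attaches a mediated device via a VM’s `hostpciN` option. The VM IDs, PCI addresses and the type `nvidia-1234` are hypothetical placeholders: check the mdev types your own card offers before running anything. On newer SR-IOV-capable GPUs each vGPU typically sits on its own virtual function, so the sketch gives each VM a different (hypothetical) address. By default it prints the commands rather than executing them.

```python
import shlex
import subprocess


def build_qm_command(vmid: int, pci_address: str, mdev_type: str, slot: int = 0) -> list[str]:
    """Build a `qm set` command that attaches one vGPU (mediated device) to a VM.

    pci_address and mdev_type are placeholders: look up the real values on your host.
    """
    return ["qm", "set", str(vmid), f"--hostpci{slot}", f"{pci_address},mdev={mdev_type}"]


def attach_vgpu(vmid: int, pci_address: str, mdev_type: str, dry_run: bool = True) -> None:
    """Print the command (dry run) or execute it on the Proxmox host."""
    cmd = build_qm_command(vmid, pci_address, mdev_type)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)  # raises CalledProcessError if qm fails


if __name__ == "__main__":
    # Hypothetical render-node VMs and virtual-function addresses; illustrative only.
    hypothetical_vms = {101: "0000:01:00.4", 102: "0000:01:00.5", 103: "0000:01:00.6"}
    for vmid, vf_address in hypothetical_vms.items():
        attach_vgpu(vmid, vf_address, "nvidia-1234")
```

As written it only prints lines such as `qm set 101 --hostpci0 0000:01:00.4,mdev=nvidia-1234`; passing `dry_run=False` would apply them on the host.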

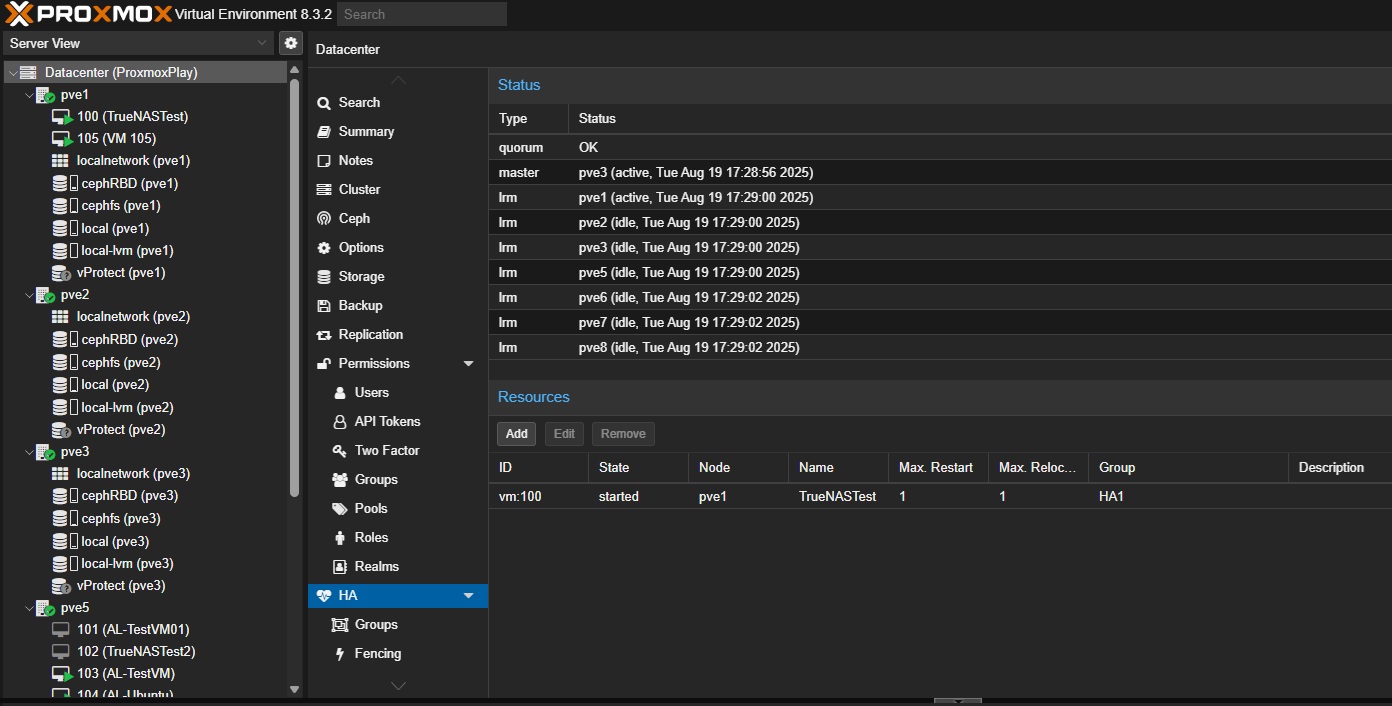
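
For the validation and monitoring steps, `nvidia-smi` can emit machine-readable CSV, which makes results easy to log or alert on. This Python sketch queries a few standard fields (`index`, `name`, `memory.total`, `memory.used`, `utilization.gpu`) and parses them. Inside a guest it reports the VM’s vGPU; on the host it reports the physical GPU. It does not break usage down per vGPU; on the host, NVIDIA’s `nvidia-smi vgpu` subcommand is the place to look for that. Fields a vGPU cannot report are returned as `None` rather than guessed.

```python
import csv
import io
import subprocess
from typing import Optional

# Standard nvidia-smi query fields; with "nounits", memory is in MiB and utilisation in %.
FIELDS = ["index", "name", "memory.total", "memory.used", "utilization.gpu"]


def _to_int(value: str) -> Optional[int]:
    """Convert a numeric field, returning None for values such as "[N/A]"."""
    try:
        return int(value)
    except ValueError:
        return None


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` output."""
    gpus = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        row = dict(zip(FIELDS, record))
        gpus.append({
            "index": _to_int(row["index"]),
            "name": row["name"],
            "memory_total_mib": _to_int(row["memory.total"]),
            "memory_used_mib": _to_int(row["memory.used"]),
            "utilization_pct": _to_int(row["utilization.gpu"]),
        })
    return gpus


def query_gpus() -> list[dict]:
    """Run nvidia-smi (must be on PATH) and return one dict per visible GPU or vGPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)
```

On a machine with the NVIDIA driver installed, calling `query_gpus()` returns one dictionary per GPU that `nvidia-smi` can see, ready to feed into whatever logging or alerting you already use.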

It’s as easy as that. From here you can take advantage of Proxmox VE’s other features, such as HCI (hyper-converged infrastructure) and HA (high availability). With just 3-5 nodes, you can build a cluster of Proxmox hypervisors managed through a single interface, with scaled-out, replicated Ceph storage providing the redundancy needed for HA. If an entire server goes down, the running VMs’ data is still safe and available, and the VMs can restart on a Proxmox host with spare capacity, with minimal downtime. There is no need to hold reserve vGPU licences for failover, as the licence server only counts currently online VMs.

I’ve been a long-time user of Proxmox, and it has been possible to set up vGPU on Proxmox before now using NVIDIA’s generic KVM drivers; however, this was not officially supported by NVIDIA. Any bugs or technical issues meant relying on your own expertise or the community’s help, which made it an unattractive option for enterprise customers that require robust support. With official Proxmox VE support in version 18 of the vGPU driver, Proxmox becomes a compelling alternative to more traditional hypervisors such as VMware or Nutanix.

At Boston Labs, we test and benchmark the latest and greatest hardware and software solutions to ensure we can recommend the right fit for your use case. We can also run tests on your behalf or provide remote access for testing; for example, we could demonstrate the features of Proxmox running on a particular set of hardware. If you are interested in what we can offer, please reach out to your account manager or our sales team to see how we can guide you.

Author

Thomas Mitchell

Field Application Engineer

Boston Limited